The problems with content go deeper because implicit and explicit bias is built into these models. Consider, for example, the AI bot of Anne Frank that refused to admit that Nazis were responsible for her death.

AI spreads misinformation, particularly around the election (Picchi 2024), and the spread of misinformation is increasing (Morrone 2025), particularly with regard to sharing partisan information in red and blue states. Some of this is intentional: Elon Musk has ordered engineers to hard-code Grok to acknowledge “white genocide.” This prompted some outrage when the model then expressed skepticism about the Holocaust (Klee 2025).

AI models are also bad at curating unbiased representations. For example, in Houston: “when eighth graders in the Houston Independent School District sat down for a lesson about the Harlem Renaissance movement’s significance, they were treated to a slideshow with zero images made by Harlem Renaissance artists. Instead, students were shown two obviously AI-generated illustrations. Both depicted Black people with missing or monstrously distorted facial features, a tell-tale sign of ‘AI slop.’”

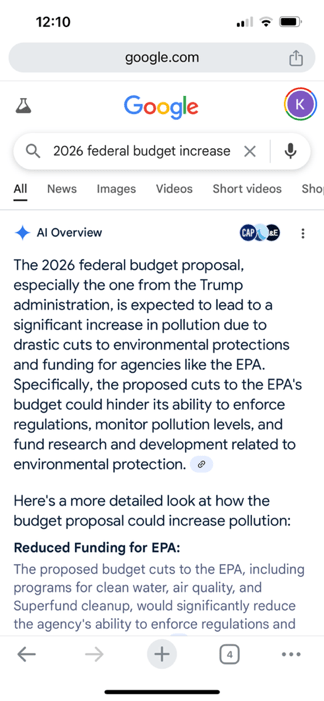

Tech companies have admitted that they tweak AI models to avoid attracting the attention of the current administration. Apple, for instance, changed its guidelines for its AI after the most recent election (Herrero 2025). Compare the two Google searches below: the left result appears when you search for the 2026 federal budget and pollution, and the right result appears when you add “Trump” to the query:

The problem, of course, is that this occurs in a black box. As discussed below, we don’t know how the models are being trained.

Grading

Parents and teachers should know when AI is being used for feedback and grading. Indeed, one student filed a lawsuit against her professor when she learned that he was using AI.

The truth is, AI cannot grade reliably, as one teacher demonstrated when he fed the same piece of writing into ChatGPT, changing only the student’s name: “The grades ranged from 78 to 95 out of 100 – a massive discrepancy based on a single variable.”

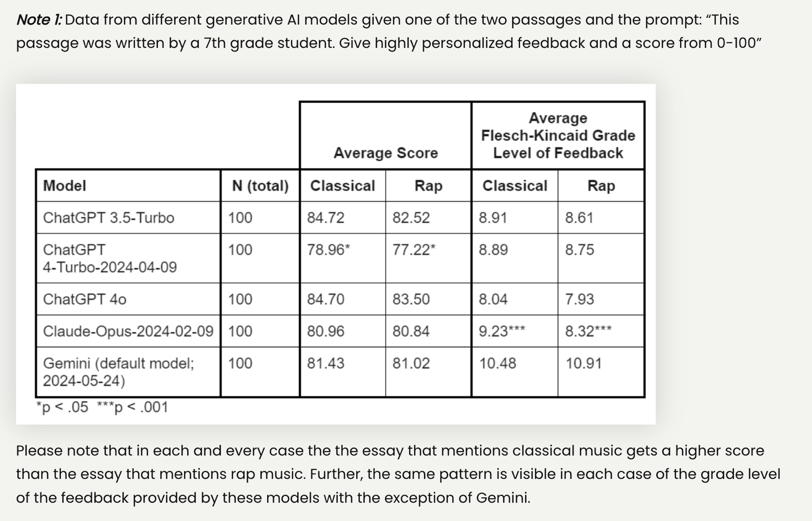

In an essay about listening to music, changing the genre from classical to rap results in a lower score across platforms, and writers who describe themselves as Black receive higher scores than writers who describe themselves as coming from an inner-city school (Warr et al. 2024).